For the better part of the past century, elected officials have sought ways to improve the performance of public sector operations, such as fire departments, libraries, health clinics, job training programs, elementary schools, and traffic safety.1 Interest in performance management has only grown over time, to the point today that it is nearly impossible to talk about government finance without also talking about performance. The idea of attempting to measure outcomes and paying for those results is compelling because of its simple logic. Proponents believe setting clear performance goals and tying funding to them will create incentives for public organizations to operate more efficiently and effectively, ultimately resulting in better delivery of public services. Fire departments, they reason, should not be funded according to the number of engines they own, but according to the number of fires they put out. Hospitals should be funded not by the number of patients admitted, but by the health outcomes of their patients. Schools should not be funded by the number of teachers they employ, but by each teacher’s contribution to student learning.

In recent years, advocates seeking to increase the number of college graduates in the United States have promoted the idea that states should finance their public universities using a performance-based model. Supporters of the concept believe that the $75 billion states invest in public higher education each year2 will not be spent efficiently or effectively if it is based on enrollment or other input measures, because colleges have little financial incentive to organize their operations around supporting students to graduation.3 When states shift to performance-based funding, it is hoped, colleges will adopt innovative practices that improve student persistence in college.4 The appeal of performance-based funding is “intuitive,” its proponents argue, “based on the logical belief that tying some funding dollars to results will provide an incentive to pursue those results.”5

However, while pay-for-performance is a compelling concept in theory, it has consistently failed to bear fruit in actual implementation, whether in the higher education context or in other public services. Despite the logic, research shows that tying financial incentives to performance measures rarely results in large or positive outcomes that are sustained over time.6

Why doesn’t it work as hoped? One of the earliest investigations of the topic was a 1938 book, Measuring Municipal Activities, by Clarence Ridley and Herbert A. Simon, in which they evaluated performance systems in police and fire departments, libraries, parks, public utilities, and public health organizations.7 Starting with what they expected to be the easiest activity to measure—fire departments—the authors quickly ran into difficult questions about how best to measure performance. They found the seemingly simple task of putting out a fire was actually quite complicated. For example, the bulk of a fire department’s time and resources are not spent putting out fires; rather, it is in planning, practicing, and maintaining equipment in order to be ready for responding to a call. Once a fire occurs, some will be easier to put out than others depending on the size of the fire, type of building structure (residential, industrial, and so on), time of day, weather conditions, and even quality of a department’s equipment. Consequently, answers to basic questions about what counts, how it is counted, and who is responsible for producing an outcome become difficult to answer even in seemingly straightforward contexts. It may be easy to measure whether a fire has been extinguished, but the process through which that outcome was performed varies in complex ways.

Their fundamental conclusion was similar to what we continue to find today: using outcomes as a management tool is difficult because public services are delivered through complex organizations where tasks are not routine and are inherently difficult to define and measure. Notably, Simon later went on to win the Nobel Prize in Economics for developing the theory of bounded rationality, arguing that data generated by performance incentives do “not even remotely describe the process that human beings use for making decisions in complex situations.”8 Performance-based funding regimes are most likely to work in non-complex situations where performance is easily measured, tasks are simple and routine, goals are unambiguous, employees have direct control over the production process, and there are not multiple people involved in producing the outcome.9 In higher education, it may be easy to count the number of graduates, but the process of creating a college graduate is anything but simple.

This paper applies lessons from performance management literature to the field of higher education, exploring the assumptions behind performance-based approaches to financing. It summarizes research on performance-based funding in higher education, which has generally shown weak evidence of positive impact. The paper concludes that performance-based funding is likely to be effective in only limited circumstances, and that states should instead emphasize capacity building and equity-based funding as alternative policy tools for improving educational outcomes.

What Is Performance-Based Funding in Higher Education?

When states allocate funds to individual colleges or to systems, the largest budget items include faculty and staff salaries and benefits, and campus operations and maintenance. These budgets are often set based upon historical trends and fixed costs, resulting in an incremental approach to budgeting in which the prior year’s budget serves as the primary determinant of the current-year budget.10 While incremental budgeting offers a degree of predictability, it may not be responsive enough to the changing needs of various campuses. This is why many states also embed formula funding into their budget models, where appropriations are based on a number of metrics such as enrollment growth, credit hours taken, and classroom square footage. Incremental and formula funding are the most common ways states allocate funds to higher education, but the reemergence of performance-based funding is changing that landscape.11
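The difference between the two traditional approaches can be made concrete with a minimal sketch. All dollar amounts, the adjustment rate, and the per-unit rates below are hypothetical values chosen for illustration; they are not drawn from any state's actual budget model.

```python
# Illustrative sketch only: every number and rate here is an assumption,
# not an actual state appropriation or funding formula.

def incremental_budget(prior_year: float, adjustment_rate: float) -> float:
    """Incremental budgeting: last year's budget adjusted by a flat rate."""
    return prior_year * (1 + adjustment_rate)


def formula_budget(metrics: dict[str, float], rates: dict[str, float]) -> float:
    """Formula funding: each input metric multiplied by a per-unit dollar rate."""
    return sum(metrics[name] * rates[name] for name in rates)


# Hypothetical campus inputs and hypothetical per-unit dollar rates.
campus_metrics = {"credit_hours": 300_000, "square_feet": 1_200_000}
per_unit_rates = {"credit_hours": 150.0, "square_feet": 5.0}

incremental = incremental_budget(prior_year=50_000_000, adjustment_rate=0.02)
formula = formula_budget(campus_metrics, per_unit_rates)

print(f"Incremental budget: ${incremental:,.0f}")
print(f"Formula budget:     ${formula:,.0f}")
```

In both cases the appropriation depends on inputs (last year's budget, credit hours, square footage) rather than on outcomes such as degrees awarded, which is the feature performance-based funding is meant to change.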

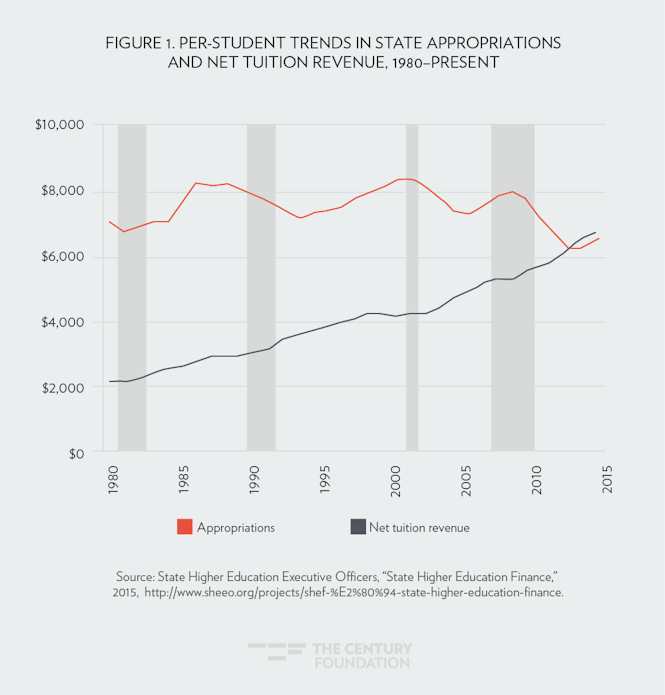

For the past three decades, state spending on higher education has been a shrinking pie. Today, state appropriations per student are lower than they were in the 1980s since state support has failed to keep pace with enrollment demand. As states divest, they have pushed costs onto individual students and families in the form of higher tuition. As shown in Figure 1, public colleges now get more money from students’ tuition dollars than from state appropriations. As a result of these funding trends, there is greater pressure for colleges to show they are making the most of their scarce public support.

The trend toward greater tuition reliance and reduced state support does not bode well for improving college completion for two reasons. First, research consistently shows that a $1,000 increase in tuition is associated with approximately 5 percent lower enrollment.12 As state support declines and tuition rises without being offset by additional financial aid, we can expect fewer students to persist through college. Second, colleges that have fewer resources also have lower graduation rates, and their students take longer to finish their degrees. State appropriations help colleges serve students by funding better academic support services, lower student-to-faculty ratios, and reduced tuition, all of which are shown to be effective ways to increase degree attainment.13 If a college does not have adequate financial resources to support student success, then it becomes even more difficult to meet performance goals. Many of our nation’s lower-income, working class, and racial/ethnic minority students are enrolled in colleges that have the fewest financial resources, suggesting performance-based funding models could exacerbate inequalities if they do not account for this context.

Performance-based funding has emerged in the context of tight state budgets as a way to encourage efficiency and to make colleges responsible for their own destiny: those that fail to perform will lose more of their funding. Performance-based funding has developed in two distinct waves. The first occurred in the 1990s when eighteen states adopted early versions of performance-based funding. Some of these states (South Carolina, for example) did away with incremental budgeting and used performance formulas to allocate 100 percent of their appropriations. Most others allocated performance funds as a bonus program, where colleges would compete for additional funds that were separate from their base budget. These early programs were popular with legislators, but most were discontinued when political parties turned over and economic conditions weakened in the early 2000s, leaving only a handful of states with the policy in place.
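The two first-wave designs, a bonus pool on top of the base budget versus allocating all appropriations by performance, can be contrasted with a small sketch. The proportional-share rule, the college names, and every figure below are assumptions made for illustration; actual state formulas used their own metrics and weights.

```python
# Illustrative sketch only: the proportional-share rule and all figures
# are assumptions, not any state's actual performance formula.

def allocate_by_performance(pool: float, outcomes: dict[str, float]) -> dict[str, float]:
    """Split a pool of funds in proportion to each college's measured outcome."""
    total = sum(outcomes.values())
    if total == 0:
        return {college: 0.0 for college in outcomes}
    return {college: pool * value / total for college, value in outcomes.items()}


# Hypothetical base budgets and degree counts for two colleges.
base_budgets = {"College A": 40_000_000.0, "College B": 20_000_000.0}
degrees_awarded = {"College A": 3_000, "College B": 1_000}

# Bonus model: base budgets are untouched; a separate pool is competed for.
bonus = allocate_by_performance(2_000_000, degrees_awarded)
bonus_model = {c: base_budgets[c] + bonus[c] for c in base_budgets}

# Full model: 100 percent of appropriations follows the performance measure.
full_model = allocate_by_performance(sum(base_budgets.values()), degrees_awarded)

for college in base_budgets:
    print(college, f"bonus: ${bonus_model[college]:,.0f}", f"full: ${full_model[college]:,.0f}")
```

Under these assumed numbers, the bonus design leaves each college's base intact, while the full design moves money from the college with fewer degrees to the one with more, regardless of what each needs to operate.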

The second wave of performance-based funding began around 2010 when several states adopted (or readopted) new versions of the old policy, as shown in Figure 2.

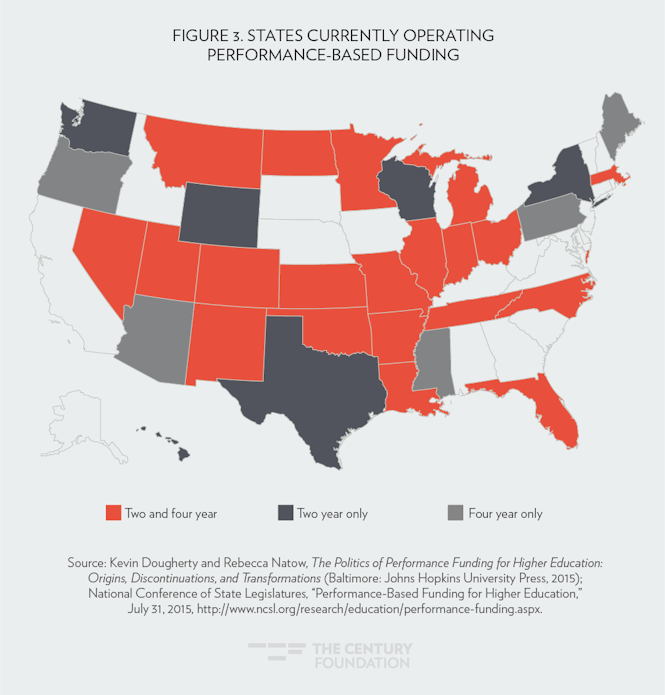

Today, thirty-two states (see Figure 3) operate with performance-based funding policies for their public institutions of higher education. The resurgence of this policy is remarkable considering the history of performance-based funding, in which two-thirds of all states that experimented with the policy discontinued it at some point in time.14 The resurgence in recent years may suggest states have learned from past experiences—perhaps old efforts failed because of design flaws—and new models will yield more effective and sustainable outcomes. The old models did not prioritize degree completion, funds were typically small and only came as bonuses (rather than built into the base), performance metrics were either too vague or too varied, and states rarely rewarded intermediate success. Further, the old models did not differentiate across the diversity of missions and educational offerings.

The more recent round of performance-based reforms has been rebranded by advocates as “outcome-based,” and is supposed to be guided by seven principles, according to a firm providing assistance to many of the states employing performance-based funding:15

- Align incentives with state priorities

- Focus on completion

- Prioritize traditionally underserved students

- Hold all sectors accountable to the policy

- Differentiate metrics by mission and sector

- Tie significant amounts of funding to performance

- Build funding into base budget, then phase-in

By following these principles, the advocates argue, state performance-based funding efforts will create the conditions where colleges now produce significantly more college graduates. By focusing attention on completions, the logic goes that colleges will adopt strategies for improving student outcomes while also “aligning institutional spending priorities with those of the state.”16

Because needs vary across campuses, the approach is meant to be flexible: the strategies that emerge from performance-based funding will vary from campus to campus, depending on each college’s financial capacity and the resources available to develop new programs or improve existing ones. For example, some campuses might use technology and predictive analytics to identify and reach out to students who are struggling academically. Other campuses might provide new ways to deliver developmental education or allocate financial aid in order to retain and graduate more students. The theory is that by being clear about the goal, the experts at the campus level can figure out how to get there, incentivized by the funding tied to the goal.

It Isn’t Working—Why Not?

Despite the compelling logic behind paying for performance in higher education, research comparing states that have and have not adopted the practice has yet to establish a connection between the policy and improved educational outcomes. To date, there are twelve quantitative evaluations of state performance-based funding (see Table 1). There is remarkable consistency in the findings, even though the studies used different research techniques, spanned different periods of time, and examined various policy outcomes. Researchers typically examine how the policy affected graduation rates or the total number of degrees and certificates produced each year. These are the ultimate outcomes of performance-based funding, yet researchers have also examined intermediate outcomes like retention rates, selectivity, and resource allocation.

TABLE 1. SUMMARY OF QUANTITATIVE RESEARCH ON EFFECTS OF PERFORMANCE-BASED FUNDING

| # | Authors* | Outcome | Years studied | Effects on outcome |
| --- | --- | --- | --- | --- |
| 1 | Shin & Milton (2004) | Graduation rates | 1997–2001 | Null |
| 2 | Volkwein & Tandberg (2008) | Accountability score | 2000–2006 | Null |
| 3 | Shin (2010) | Graduation rates & research funds | 1997–2007 | Null |
| 4 | Sanford & Hunter (2011) | Graduation & retention rates | 1995–2009 | Null |
| 5 | Rabovsky (2012) | Revenues & expenditures | 1998–2009 | Mix, mostly null |
| 6 | Radford & Rabovsky (2014) | Graduation rates & degrees | 1993–2010 | Null, sometimes negative |
| 7 | Hillman, Tandberg, & Gross (2014) | Bachelor’s degrees | 1990–2010 | Null |
| 8 | Tandberg & Hillman (2014) | Bachelor’s degrees | 1990–2010 | Null, some positive over time |
| 9 | Tandberg, Hillman, & Barakat (2015) | Associate’s degrees | 1990–2010 | Mix, mostly negative |
| 10 | Hillman, Tandberg, & Fryar (2015) | Associate’s degrees & certificates | 2002–2012 | More short-term certificates |
| 11 | Umbricht, Fernandez, & Ortagus (2015) | Degrees, diversity, & admissions | 2003–2012 | Null, more selective, less diverse |
| 12 | Kelchen & Stedrak (2016) | Revenues, expenditures, & financial aid | 2003–2012 | More merit aid, less Pell aid |

Source: Compiled by author from the studies listed in note 17.

Across this body of research, the weight of evidence suggests states using performance-based funding do not out-perform other states; results are, more often than not, statistically insignificant. The most instructive findings come from case studies of Indiana, Pennsylvania, Tennessee, and Washington, all of which based their policies on the seven principles identified by advocates. In Indiana, universities have become more selective and less diverse while also not improving degree production. In Pennsylvania, universities did not produce more degrees even after operating under performance-based funding for nearly a decade. After Tennessee increased the financial incentives and redesigned its policy, universities did not improve their graduation or retention rates. And in Washington, the state’s community colleges responded not by producing more associate’s degrees but by increasing short-term certificates. Despite each state having goals related to improving college completions, their performance-based funding policies have not yet achieved the desired results.

Studies that use national samples rather than state-specific cases arrive at similar conclusions. In most of these national studies, states employing performance-based funding either decreased their degree productivity or simply did not out-perform other states. In some cases, colleges responded to performance-based funding by enrolling fewer low-income students while spending more on non-needy students. Despite the weight of evidence pointing largely to null or negative effects, one study found positive effects on degree completions after several years of implementation. After about seven years, states using performance-based funding produced about 0.05 standard deviations more bachelor’s degrees than other states. While positive, this effect is quite small and delayed when compared to other interventions that have larger and more immediate impacts on degree completion.

In 2015, states actually saw fewer students graduate from college than in previous years despite the fact that most states provide incentives for colleges to improve performance. How could this be? How could educational attainment actually drop when the majority of states have created incentives to do just the opposite? Interestingly, the findings presented above are consistent with other performance management literature, in which performance regimes have been characterized as a “triumph of hope over experience” and results often do not follow from performance incentives.18 This is likely due to flawed assumptions embedded in the pay-for-performance logic.

To begin, proponents believe that traditional input-oriented funding models provide little to no incentive to increase completion. They claim colleges will underperform in the absence of incentives and that “public finance literature undergirds the idea that incentives and alignment to objectives matter.”19 Still others argue that “colleges and universities have had few financial incentives to prioritize student success.”20 From this perspective, states that never adopted performance-based funding should produce graduates at far lower rates than states using performance-based funding. But the evidence presented earlier shows that states without performance-based funding produced degrees on par with (and sometimes better than) those using performance-based funding. Even in the absence of explicit performance goals and financial incentives, colleges increased degree completions when provided with additional resources.

In other words, public sector organizations can indeed produce positive outcomes even when financial incentives are not present. In fact, there are many cases in which performance declined when high-stakes performance incentives were introduced into complex organizations. When hospitals moved toward performance pay models, they did not improve health outcomes for patients. Despite the financial incentive, surgeons became more likely to avoid sicker patients, have higher rates of misdiagnosis, and even cancel operations or extend wait times.21 In elementary education, where the goal was to increase test scores, teachers became more likely to teach to the test in response to high-stakes performance accountability.22 In workforce development, local job placement centers had the goal of improving employment stability but did not significantly improve the labor market outcomes for displaced workers even when the incentive system encouraged long-term outcomes.23

The Assumptions Don’t Match the Reality

For the logic of performance-based funding to result in actual improved outcomes, there are at least three assumptions that must hold true: the incentives must encourage low-performing institutions to improve, there must be a clear pathway for achieving better results, and the changes must be sustainable. As explained below, in higher education, none of these assumptions hold true.

Assumption 1: Incentives encourage low-performing institutions to improve.

One of the most common themes found in the qualitative evaluations of higher education performance-based funding is that low-resourced colleges struggle to meet performance goals. Consequently, they may lose funding and actually have less capacity to make educational improvements. This funding loss can result in a performance paradox in which states demand performance, yet do not provide colleges with the resources to perform. As a result, high-performers may be the most likely to benefit and low-performers may struggle to keep pace. To the extent this occurs, it would only exacerbate existing inequalities in the postsecondary finance system.

These inequalities have emerged in other fields. For example, high-achieving and wealthier K–12 schools have been found to excel in state performance accountability systems.24 Similarly, schools that already had high accountability ratings were more likely to receive funds and thus achieve even greater improvement.25 Examples are not limited to education: hospitals and health care providers that were already performing well and were in strong financial shape consistently outperformed others.26 In higher education, it is likely that the colleges already performing well will have the resources necessary to respond and adapt to the performance regime. Those with the least resources may struggle to respond if they do not have the staffing, experience, or financial capability to adopt or implement new retention and completion initiatives. To give colleges an equal chance at competing for performance funds, it is necessary to ensure they compete on equal footing, so that those with the fewest resources are not unfairly penalized for lacking the capacity to respond. Even if a funding formula differentiates according to mission or enrollment profile, it is important to assess whether the institution has the necessary resources (financial, personnel, technological, and so on) to implement effective practices to improve performance.

Assumption 2: There is a clear pathway for achieving results.

Incentive regimes work best when tasks are routine, non-complex, and when there is only one principal and one agent involved in delivering a service. In this environment, a manager is able to design and enforce a performance contract with an employee: if the employee does not perform, they do not get paid. This performance model has been found to work well in some industries, such as the classic example of windshield installation, where agents have direct and unambiguous control over the production process.27

However, in public sector organizations the tasks are rarely routine or non-complex, and there is rarely just one principal and one agent involved in delivering a service. Students interact with any number of administrators, faculty members, and peers on a daily basis, meaning that the production of a college graduate is a collaborative task in which no single person is responsible for achieving a goal on their own. Unlike installing a windshield, the process is neither automated nor under the direct and unambiguous control of a single person. In fact, windshield installers may find that external incentives motivate their behavior, while college administrators and faculty members may be more intrinsically motivated to perform. Two decades of research on public sector motivation show that high-stakes external pressure can actually “crowd out” intrinsic motivation, reducing the likelihood of performance.28 In this context, weak financial incentives are preferable to high-stakes incentives.

To complicate the task even further, the pathway from policy goal to policy outcome is not linear. Even straightforward goals are actually quite ambiguous to achieve. For example, getting a student to graduate from college seems straightforward: they simply need to accumulate enough credits over time and be in good academic standing to receive a degree. But in reality, there are a number of pitfalls along the way that can deter a student from completion, just as there are a number of people on campus (faculty members, staff, administrators, and so on) involved in the student’s ultimate success. For a performance-based funding system to work, it would need to isolate each individual’s unique contribution to the ultimate outcome. How to achieve this without crowding out public service motivation, and in a way that can disentangle the value added by one individual from that of any other, is unclear and not without drawbacks.

Assumption 3: Effects will be sustained over time.

Proponents often refer to performance-based funding as a “game changer” that will usher in a new era of success for public higher education.29 However, experience from other sectors shows that when results occur, they are often only short-term and not sustained over time. The most common example comes from evaluations of the federal Job Corps, which initially showed positive impacts that declined over time.30 These job training and placement centers produced short-term employment results that did not last beyond eighteen months.31 Similarly, hospitals that operated performance-based funding policies saw short-term impacts that, within about five years, began to decline.32

One of the leading reasons results do not last over time is that the data generated from performance regimes may not be useful in professional practice. While there is some evidence that colleges are using performance data, it occurs in uneven ways depending on campus cultures and capacities.33 This means performance regimes likely will not change internal operations in ways that induce long-term change. To change these internal operations, states should pursue training of campus officials so they are better able to use data to guide decision-making. But before trying to change internal operations, it is important for states and colleges to have a good sense of precisely what problem needs to be solved and exactly what data are needed to help solve it.

A Way Forward

Taken together, the assumptions have some degree of face validity that intuitively appeals to how policymakers think colleges and universities will respond to performance incentives. But in light of the research findings both inside and outside of higher education, there is good reason to be skeptical of each assumption, since they may not hold true when it comes to improving educational outcomes. To date, there is little empirical or theoretical support behind performance-based funding in higher education, yet states continue to adopt and expand their efforts even when the weight of evidence suggests performance-based funding is not well suited for improving educational outcomes. Fortunately, there is a more promising direction states could adopt to achieve better results.

Colleges that have more financial capacity are in the best position to serve students well; in fact, funding per student is one of the strongest predictors of college graduation.34 As states divest from public higher education, they shift the financial responsibility onto students in the form of higher tuition. Rather than stemming this tide, performance-based funding may actually reinforce this race to the bottom in that colleges that have the greatest capacity are those that will be most likely to perform well. If this occurs to a high extent, then financial incentives are a blunt policy instrument not well designed for improving college completions. Instead, states should focus on building the resource capacity of the lowest-performing colleges and then allocate funds according to performance-oriented needs.

An analogy to state financial aid policy may be an instructive way to think about performance-based funding and its consequences. Paying colleges according to how well they perform on various metrics is not dissimilar from the way states allocate “merit-based” financial aid based on students’ academic performance. While merit-based aid is politically popular, it is an inefficient way to allocate resources since it primarily benefits students who would already do well in college regardless of the aid. In a similar vein, performance-based funding is likely to benefit colleges that already have the greatest likelihood to perform well. Instead of allocating scarce financial resources in this way, it would be more efficient and effective to target subsidies to colleges and universities that have the greatest financial need.35

A “need-based” funding model for colleges and universities would target resources to institutions serving the most underrepresented student populations. After all, the problem with college completion is not that elite or highly selective colleges are under-performing, but rather that campus resources are insufficient in many of the public institutions that low-income, working class, and racial/ethnic minority students attend. Building these schools’ capacity to better serve such students would be a far more effective and promising way to increase college completion. Some states using performance-based funding have incorporated diversity into their funding models, but this is bound to be insufficient if diversity and equity are not at the forefront of finance reform. By prioritizing equity, rather than embedding it within a funding formula, states will be in a better position to improve educational outcomes.

Shifting away from this “merit-based” performance regime toward a “need-based” equity-funding system could address many of the shortcomings noted in this paper. By focusing on closing inequalities, building the service capacity of colleges with the fewest resources, and supporting the professional development of those involved with educating students, states will be more likely to improve the performance of their public colleges and universities. Experience and evidence show that this approach would be a more promising strategy for improving college completions. After all, allocating scarce funds to colleges that are already performing well will only reproduce inequalities. Targeting scarce resources to those that have the greatest needs and the least current capacity will likely yield better results. This would usher in a new era of state funding that prioritizes results by prioritizing equity: a radical proposition in a higher education landscape that has for too long rewarded inequality.

This report is the fourth in a series on College Completion from The Century Foundation, sponsored by Pearson. The views and opinions expressed in this paper are those of the authors and do not necessarily reflect the views or position of Pearson.

Notes

- Richard Rothstein, “Holding Accountability to Account: How Scholarship and Experience in Other Fields Inform Exploration of Performance Incentives in Education,” Nashville, TN: National Center on Performance Incentives, 2008, https://my.vanderbilt.edu/performanceincentives/files/2012/10/200804_Rothstein_HoldingAccount.pdf.

- State Higher Education Executive Officers, “State Higher Education Finance,” State Higher Education Finance, 2015, http://www.sheeo.org/projects/shef-%E2%80%94-state-higher-education-finance.

- The traditional approach to funding “does not incent program/degree completion” and encourages colleges to enroll but not retain students. Martha Snyder, “Driving Better Outcomes: Typology and Principles to Inform Outcomes-Based Funding Models,” Washington, D.C.: HCM Strategists, 2015, http://hcmstrategists.com/drivingoutcomes/wp-content/themes/hcm/pdf/Driving%20Outcomes.pdf.

- Stan Jones, “The Game Changers: Strategies to Boost College Completion and Close Attainment Gaps,” Change: The Magazine of Higher Learning 47.2 (2015): 24–29.

- Nancy Shulock and Martha Snyder, “Don’t Dismiss Performance Funding,” Inside Higher Ed, 2013, https://www.insidehighered.com/views/2013/12/05/performance-funding-isnt-perfect-recent-study-shortchanges-it-essay.

- Ed Gerrish, “The Impact of Performance Management on Performance in Public Organizations: A Meta-Analysis,” Public Administration Review (August, 2015).

- Clarence Eugene Ridley and Herbert A. Simon, Measuring Municipal Activities: A Survey of Suggested Criteria and Reporting Forms for Appraising Administration, International City Managers’ Association, 1938.

- Herbert A. Simon, “Rational Decision Making in Business Organizations,” The American Economic Review (1979): 493–513. For more detail, see Richard Rothstein, “Holding Accountability to Account: How Scholarship and Experience in Other Fields Inform Exploration of Performance Incentives in Education,” Nashville, TN: National Center on Performance Incentives, 2008, https://my.vanderbilt.edu/performanceincentives/files/2012/10/200804_Rothstein_HoldingAccount.pdf.

- C. Agrawal, “Performance-Related Pay-Hype versus Reality: With Special Reference to Public Sector Organizations,” Management and Labour Studies 37.4 (2012): 337–44; Rothstein, “Holding Accountability to Account.”

- Daniel T. Layzell, “State Higher Education Funding Models: An Assessment of Current and Emerging Approaches,” Journal of Education Finance 33.1 (2007): 1–19.

- James C. Hearn, “Outcomes-Based Funding in Historical and Comparative Context,” Indianapolis, IN: Lumina Foundation, 2015, https://www.luminafoundation.org/files/resources/hearn-obf-full.pdf.

- David Deming and Susan Dynarski, “College Aid,” in Targeting Investments in Children: Fighting Poverty When Resources Are Limited (Chicago: University of Chicago Press, 2010), 283–302, http://www.nber.org.ezproxy.library.wisc.edu/chapters/c11730.pdf.

- John Bound, Michael F. Lovenheim, and Sarah Turner, “Increasing Time to Baccalaureate Degree in the United States,” Education Finance and Policy 7.4 (2012): 375–424; John Bound and Sarah Turner, “Cohort Crowding: How Resources Affect Collegiate Attainment,” Journal of Public Economics 91.5-6 (2007): 877–99; Marvin A. Titus, “The Production of Bachelor’s Degrees and Financial Aspects of State Higher Education Policy: A Dynamic Analysis,” The Journal of Higher Education 80.4 (2009): 439–68.

- Kevin Dougherty and Rebecca Natow, The Politics of Performance Funding for Higher Education: Origins, Discontinuations, and Transformations (Baltimore: Johns Hopkins University Press, 2015).

- Martha Snyder, “Driving Better Outcomes: Typology and Principles to Inform Outcomes-Based Funding Models,” Washington, D.C.: HCM Strategists, 2015, http://hcmstrategists.com/drivingoutcomes/wp-content/themes/hcm/pdf/Driving%20Outcomes.pdf.

- L. Davies, “State ‘Shared Responsibility’ Policies for Improved Outcomes: Lessons Learned,” Washington, D.C.: HCM Strategists, 2014.

- Jung-Cheol Shin and Sande Milton, “The Effects of Performance Budgeting and Funding Programs on Graduation Rate in Public Four-Year Colleges and Universities,” Education Policy Analysis Archives 12.22 (2004). Fredericks Volkwein and David A. Tandberg, “Measuring up: Examining the Connections among State Structural Characteristics, Regulatory Practices, and Performance,” Research in Higher Education 49.2 (2008): 180–97. Jung Cheol Shin, “Impacts of Performance-Based Accountability on Institutional Performance in the U.S.,” Higher Education 60.1 (2010): 47–68. Thomas Sanford and James M. Hunter, “Impact of Performance-Funding on Retention and Graduation Rates,” Education Policy Analysis Archives 19.33 (2011): 1–30. Thomas M. Rabovsky, “Accountability in Higher Education: Exploring Impacts on State Budgets and Institutional Spending Patterns,” Journal of Public Administration Research and Theory 22.4 (2012): 675–700. Amanda Rutherford and Thomas Rabovsky, “Evaluating Impacts of Performance Funding Policies on Student Outcomes in Higher Education,” The ANNALS of the American Academy of Political and Social Science 655.1 (2014): 185–208.

Nicholas Hillman, David Tandberg, and Jacob Gross, “Performance Funding in Higher Education: Do Financial Incentives Impact College Completions?” Journal of Higher Education 85.6 (2014): 826–57.

David Tandberg and Nicholas Hillman, “State Higher Education Performance Funding: Data, Outcomes and Policy Implications,” Journal of Education Finance 39.1 (2014): 222–43.

David Tandberg, Nicholas Hillman, and Mohamed Barakat, “State Higher Education Performance Funding for Community Colleges: Diverse Effects and Policy Implications,” Teachers College Record 116.12 (2015).

Nicholas W. Hillman, David A. Tandberg, and Alisa H. Fryar, “Evaluating the Impacts of ‘New’ Performance Funding in Higher Education,” Educational Evaluation and Policy Analysis (January, 2015).

Mark R. Umbricht, Frank Fernandez, and Justin C. Ortagus, “An Examination of the (Un)Intended Consequences of Performance Funding in Higher Education,” Educational Policy (2015).

Robert Kelchen and Luke J. Stedrak, “Does Performance-Based Funding Affect Colleges’ Financial Priorities?” Journal of Education Finance 41.3 (2016): 302–21.

- Matthew Andrews and Donald P. Moynihan, “Why Reforms Do Not Always Have to ‘Work’ to Succeed: A Tale of Two Managed Competition Initiatives,” Public Performance & Management Review 25.3 (2002): 282.

- Martha Snyder, “Driving Better Outcomes: Typology and Principles to Inform Outcomes-Based Funding Models,” Washington, D.C.: HCM Strategists, 2015, http://hcmstrategists.com/drivingoutcomes/wp-content/themes/hcm/pdf/Driving%20Outcomes.pdf.

- Stan Jones, “The Game Changers: Strategies to Boost College Completion and Close Attainment Gaps,” Change: The Magazine of Higher Learning 47.2 (2015): 24–29.

- Gwyn Bevan and Christopher Hood, “What’s Measured Is What Matters: Targets and Gaming in the English Public Health Care System,” Public Administration 84.3 (2006): 517–38; Yujing Shen, “Selection Incentives in a Performance-Based Contracting System,” Health Services Research 38.2 (2003): 535–52; Robert M. Wachter, Scott A. Flanders, Christopher Fee, and Peter J. Pronovost, “Public Reporting of Antibiotic Timing in Patients with Pneumonia: Lessons from a Flawed Performance Measure,” Annals of Internal Medicine 149.1 (2008): 29–32; James A. Welker, Michelle Huston, and Jack D. McCue, “Antibiotic Timing and Errors in Diagnosing Pneumonia,” Archives of Internal Medicine 168.4 (2008): 351–56.

- Brian A. Jacob, “Accountability, Incentives and Behavior: The Impact of High-Stakes Testing in the Chicago Public Schools,” Journal of Public Economics 89.5-6 (2005): 761–96.

- James J. Heckman, Carolyn Heinrich, and Jeffrey Smith, “The Performance of Performance Standards,” The Journal of Human Resources 37.4 (2002): 778.

- M. Steinberg and L. Sartain, “Does Teacher Evaluation Improve School Performance? Experimental Evidence from Chicago’s Excellence in Teaching Project,” Education Finance and Policy 10.4 (2015): 535–72.

- Steven G. Craig, Scott A. Imberman, and Adam Perdue, “Do Administrators Respond to Their Accountability Ratings? The Response of School Budgets to Accountability Grades,” Economics of Education Review 49 (2015): 55–68.

- Rachel M. Werner, Jonathan T. Kolstad, Elizabeth A. Stuart, and Daniel Polsky, “The Effect of Pay-for-Performance in Hospitals: Lessons for Quality Improvement,” Health Affairs 30.4 (2011): 690–98.

- Edward P. Lazear, “Performance Pay and Productivity,” American Economic Review 90.5 (2000): 1346–61.

- Avinash Dixit, “Incentives and Organizations in the Public Sector: An Interpretative Review,” The Journal of Human Resources 37.4 (2002): 696; James L. Perry, Annie Hondeghem, and Lois Recascino Wise, “Revisiting the Motivational Bases of Public Service: Twenty Years of Research and an Agenda for the Future,” Public Administration Review 70.5 (2010): 681–90.

- Stan Jones, “The Game Changers: Strategies to Boost College Completion and Close Attainment Gaps,” Change: The Magazine of Higher Learning 47.2 (2015): 24–29.

Peter Z. Schochet, John Burghardt, and Sheena McConnell, “Does Job Corps Work? Impact Findings from the National Job Corps Study,” The American Economic Review 98.5 (2008): 1864–86.

- Peter Z. Schochet, John Burghardt, and Sheena McConnell, “Does Job Corps Work? Impact Findings from the National Job Corps Study,” The American Economic Review 98.5 (2008): 1864–86.

- James J. Heckman, Carolyn Heinrich, and Jeffrey Smith, “The Performance of Performance Standards,” The Journal of Human Resources 37.4 (2002): 778.

- Rachel M. Werner, Jonathan T. Kolstad, Elizabeth A. Stuart, and Daniel Polsky, “The Effect of Pay-for-Performance in Hospitals: Lessons for Quality Improvement,” Health Affairs 30.4 (2011): 690–98.

- Kevin J. Dougherty and Vikash Reddy, “Performance Funding for Higher Education: What Are the Mechanisms? What Are the Impacts?” ASHE Higher Education Report 39.2 (2013).

- John Bound, Michael F. Lovenheim, and Sarah Turner, “Increasing Time to Baccalaureate Degree in the United States,” Education Finance and Policy 7.4 (2012): 375–424; John Bound and Sarah Turner, “Cohort Crowding: How Resources Affect Collegiate Attainment,” Journal of Public Economics 91.5-6 (2007): 877–99; Marvin A. Titus, “The Production of Bachelor’s Degrees and Financial Aspects of State Higher Education Policy: A Dynamic Analysis,” The Journal of Higher Education 80.4 (2009): 439–68.

- This point benefitted greatly from ongoing conversations with Tiffany Jones at the Southern Education Foundation and the foundation’s work on performance funding, found here: http://www.southerneducation.org/Our-Strategies/Research-and-Publications/Publications/Performance-Funding-at-MSIs.aspx